Large Language Models for Medicine: A Look at Med-PaLM

This article looks at a recent advance in applying large language models (LLMs) to medicine: the development and evaluation of Med-PaLM.

Introduction

The medical field requires accurate, reliable, and safe information exchange. Traditionally, artificial intelligence (AI) models have struggled to use language effectively in this domain, which has limited their application in real-world clinical workflows. LLMs, however, offer a new opportunity. As foundation models, they can be adapted to a wide range of tasks and domains with comparatively little effort. Their expressive and interactive nature makes them particularly promising for medical applications such as knowledge retrieval, clinical decision support, and patient triage.

Because medicine is safety-critical, this promise has to be matched by thorough evaluation frameworks that measure progress and help mitigate potential harms from LLMs, such as generating inaccurate medical information or reproducing biases.

MultiMedQA: A Medical Question Answering Benchmark

To evaluate LLM performance in a medical context, researchers curated MultiMedQA, a benchmark combining seven medical question-answering datasets, including a new dataset called HealthSearchQA. This benchmark encompasses:

Multiple-choice datasets like MedQA (USMLE-style questions), MedMCQA, PubMedQA, and MMLU clinical topics

Long-form answer datasets like HealthSearchQA, LiveQA, and MedicationQA
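To make the split between the two dataset families concrete, here is a minimal Python sketch of the benchmark's composition as described above. The `Dataset` class, the `by_format` helper, and the `accuracy` function are illustrative names, not part of any released MultiMedQA tooling. The sketch assumes that multiple-choice answers are scored by exact match against a gold option letter; long-form answers are not scored this way and are instead judged by human raters, as discussed later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    """One MultiMedQA component (illustrative structure, not an official schema)."""
    name: str
    answer_format: str  # "multiple_choice" or "long_form"


# The seven datasets named in the article, grouped by answer format.
MULTIMEDQA = [
    Dataset("MedQA", "multiple_choice"),
    Dataset("MedMCQA", "multiple_choice"),
    Dataset("PubMedQA", "multiple_choice"),
    Dataset("MMLU clinical topics", "multiple_choice"),
    Dataset("HealthSearchQA", "long_form"),
    Dataset("LiveQA", "long_form"),
    Dataset("MedicationQA", "long_form"),
]


def by_format(datasets, answer_format):
    """Return the names of all datasets with the given answer format."""
    return [d.name for d in datasets if d.answer_format == answer_format]


def accuracy(predictions, gold):
    """Fraction of predicted option letters that exactly match the gold letters."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)
```

Calling `by_format(MULTIMEDQA, "long_form")` returns the three long-form datasets, and `accuracy` can be applied to any list of predicted and gold option letters from a multiple-choice dataset.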

MultiMedQA allows researchers to assess various aspects of LLMs, including:

Factuality: Agreement of answers with current medical consensus.

Comprehension: Ability to understand and interpret medical information.

Reasoning: Ability to apply medical knowledge to draw conclusions.

Harm and Bias: Potential for answers to mislead or perpetuate harmful biases.

PaLM and Flan-PaLM: Baseline Models

The study utilized Google’s Pathways Language Model (PaLM) and its instruction-tuned variant, Flan-PaLM, as baseline models.

PaLM: Trained on a massive dataset of text and code, demonstrating impressive performance on various reasoning tasks.

Flan-PaLM: Further fine-tuned using instructions and examples, achieving state-of-the-art results on multiple benchmarks.

Researchers tested these models on MultiMedQA using several prompting strategies: few-shot, chain-of-thought (CoT), and self-consistency prompting (sampling several chain-of-thought answers and keeping the most common one). Notably, Flan-PaLM achieved state-of-the-art accuracy on all of the multiple-choice datasets, even surpassing human performance on some.
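The prompting strategies mentioned above can be sketched in a few lines of Python. This is a simplified illustration, not the prompts or code used in the study: the prompt template, the `Answer: (X)` output convention, and the function names `build_few_shot_prompt`, `extract_choice`, and `self_consistency` are all assumptions made for this example. The model call itself is left out; the sketch only shows how a few-shot chain-of-thought prompt is assembled and how several sampled completions are reduced to one answer by majority vote.

```python
import re
from collections import Counter


def build_few_shot_prompt(examples, question):
    """Assemble a few-shot chain-of-thought prompt.

    `examples` is a list of (question, explanation, answer_letter) tuples.
    The template below is hypothetical, chosen only for illustration.
    """
    parts = [
        f"Question: {q}\nExplanation: {explanation}\nAnswer: ({answer})"
        for q, explanation, answer in examples
    ]
    # The final block is left open so the model continues with its own reasoning.
    parts.append(f"Question: {question}\nExplanation:")
    return "\n\n".join(parts)


def extract_choice(completion):
    """Pull the option letter out of a completion, or None if none is found."""
    match = re.search(r"Answer:\s*\(([A-E])\)", completion)
    return match.group(1) if match else None


def self_consistency(completions):
    """Majority vote over the answers parsed from several sampled completions."""
    votes = Counter(
        choice for choice in map(extract_choice, completions) if choice is not None
    )
    if not votes:
        return None
    return votes.most_common(1)[0][0]
```

In use, the same prompt would be sent to the model several times with sampling enabled, and the resulting completions passed to `self_consistency`. Completions that contain no parseable answer are simply ignored in the vote.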

Addressing Flan-PaLM Limitations: Instruction Prompt Tuning and Med-PaLM

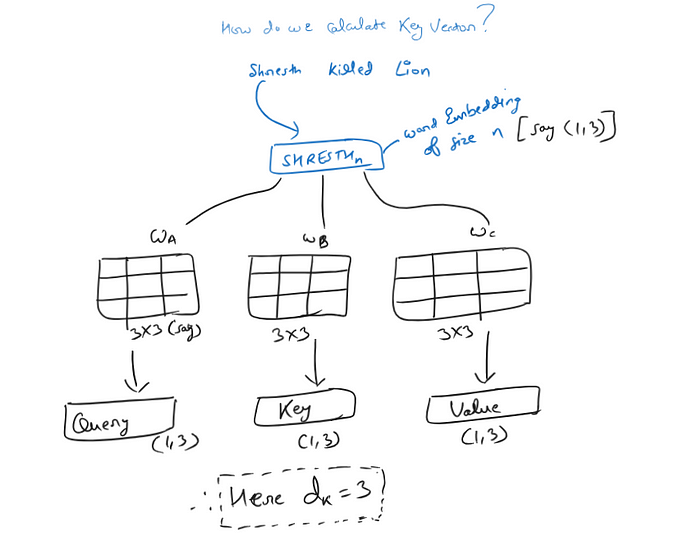

Despite strong performance on multiple-choice questions, Flan-PaLM revealed significant limitations when answering consumer medical questions, highlighting the need for further alignment with the medical domain. To address this, researchers introduced instruction prompt tuning, a novel approach to adapt LLMs to specific domains like medicine.

This technique builds upon prompt tuning, a parameter-efficient method for adapting LLMs to downstream tasks in which a small set of soft prompt vectors is learned while the model's own weights stay frozen. In instruction prompt tuning, the learned soft prompt is prepended to the hard prompt (the human-written instructions and examples), which is followed by the actual question. This combination guides the model to generate more domain-specific and appropriate responses.
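The input layout described above can be illustrated with a toy Python sketch. Everything here is an assumption for illustration: the tiny embedding size, the soft prompt length, the token ids, and the function names are invented, and plain Python lists stand in for the tensors a real framework would use. The sketch shows only how the learned soft prompt vectors sit in front of the embedded hard prompt and question; the training loop that updates the soft prompt (and nothing else) is not shown.

```python
import random

EMBED_DIM = 4        # toy embedding size, far smaller than any real model
SOFT_PROMPT_LEN = 3  # toy number of learned soft prompt vectors


def init_soft_prompt(length, dim, seed=0):
    """Create the trainable soft prompt: `length` vectors of size `dim`."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(length)]


def embed_tokens(token_ids, embedding_table):
    """Look up frozen embeddings for ordinary (hard) prompt tokens."""
    return [embedding_table[token_id] for token_id in token_ids]


def build_model_input(soft_prompt, hard_prompt_ids, question_ids, embedding_table):
    """Concatenate: [soft prompt] + [embedded hard prompt] + [embedded question]."""
    return (
        soft_prompt
        + embed_tokens(hard_prompt_ids, embedding_table)
        + embed_tokens(question_ids, embedding_table)
    )


# Toy usage with a 10-token vocabulary; the ids below are arbitrary placeholders.
embedding_table = [[float(i)] * EMBED_DIM for i in range(10)]
soft_prompt = init_soft_prompt(SOFT_PROMPT_LEN, EMBED_DIM)
model_input = build_model_input(soft_prompt, [1, 2], [3], embedding_table)
```

Here `model_input` has six rows: three soft prompt vectors, two hard prompt token embeddings, and one question token embedding. In prompt tuning, gradients would flow only into `soft_prompt`, while `embedding_table` and the rest of the model stay fixed.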

By applying instruction prompt tuning to Flan-PaLM with carefully curated examples and instructions from clinicians, researchers created Med-PaLM. This new model, specifically designed for the medical domain, demonstrated substantial improvements in:

Scientific Grounding: Aligning answers with current medical consensus.

Harm Reduction: Minimizing the potential for harmful advice.

Bias Mitigation: Reducing the inclusion of biased information.

Human Evaluation: Comparing Med-PaLM with Clinicians

To assess Med-PaLM’s performance in real-world scenarios, researchers conducted a human evaluation involving clinicians and lay users. They compared the answers generated by Med-PaLM and Flan-PaLM to those provided by clinicians on consumer medical questions.

Results

Clinician Assessment: Med-PaLM’s answers were rated significantly better than Flan-PaLM’s across all evaluated aspects, including scientific accuracy, harm potential, bias, and completeness. However, clinician-generated answers remained superior overall.

Lay User Assessment: Med-PaLM was judged more helpful and relevant to user intent compared to Flan-PaLM, although it still fell short of clinician performance.
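Comparisons like the ones above are typically summarized as the share of answers from each source that raters judged favorably on each evaluation axis. The following Python sketch shows one simple way such ratings could be aggregated. It is an assumption about the bookkeeping, not the study's actual analysis code, and the record layout `(source, axis, favorable)` and the function name `favorable_rate` are hypothetical.

```python
from collections import defaultdict


def favorable_rate(ratings):
    """Compute the fraction of favorable ratings per (source, axis) pair.

    `ratings` is an iterable of (source, axis, favorable) records, where
    `favorable` is True if the rater judged the answer acceptable on that axis.
    """
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for source, axis, favorable in ratings:
        totals[(source, axis)] += 1
        favorable_counts[(source, axis)] += int(favorable)
    return {key: favorable_counts[key] / totals[key] for key in totals}
```

Each record would correspond to one rater's judgment of one answer, with `source` identifying who produced the answer (for example a model or a clinician) and `axis` naming the aspect being rated.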

Key Observations:

Scaling Improves Performance: Larger language models like PaLM 540B consistently outperformed smaller ones, suggesting that the ability to encode and use medical knowledge improves with model scale.

Instruction Prompt Tuning is Crucial: This technique proved essential for aligning LLMs to the medical domain, resulting in safer, more accurate, and less biased responses compared to generic instruction tuning.

Future Directions and Challenges

This study highlights the potential of LLMs like Med-PaLM in revolutionizing medical information access and utilization. However, significant challenges remain:

Expanding MultiMedQA: Including more diverse medical domains, languages, and tasks that better reflect real-world clinical workflows.

Enhancing LLM Capabilities: Improving grounding in medical literature, uncertainty communication, multilingual support, and safety alignment.

Refining Human Evaluation: Developing more comprehensive and less subjective evaluation frameworks that consider health equity, cultural nuances, and diverse user needs.

Conclusion

The development of Med-PaLM showcases the potential of LLMs in assisting with medical question answering. While challenges remain, this research paves the way for future innovations in medical AI, ultimately aiming to create safer, more accessible, and equitable healthcare solutions. Continued research, collaboration between stakeholders, and careful consideration of ethical implications will be crucial for realizing the full potential of LLMs in medicine.